DEVELOPING PHYSICS-BASED VR HANDS IN UNITY

Written by Pierce McBride

At Amebous Labs, I certainly wasn’t the only person who was excited to play Half-Life: Alyx last year. A few of us were eager to try the game when it came out, and I liked it enough to write about it. I liked a lot of things about the game, a few I didn’t, but more importantly for this piece, Alyx exemplifies what excites me about VR. It’s not the headset; surprisingly, it’s the hands.


Half-Life: Alyx

More than most other virtual reality (VR) games, Alyx makes me use my hands in a way that feels natural. I can push, pull, grab, and throw objects. Sometimes I’m pulling wooden planks out of the way in a doorframe. Sometimes I’m searching lockers for a few more pieces of ammunition. That feels revelatory, but at least at the time, I wasn’t entirely sure how they did it. I’ve seen the way other games often implement hands, and I had implemented a few myself. Simple implementations usually result in something…a little less exciting.

Skyrim VR

Alyx set a new bar for object interaction, but implementing something like it took some experimentation, which I’ll show you below.

For this quick sample, I’ll be using Unity 2020.2.2f1. It’s the most recent version as of the time of writing, but this implementation should work for any version of Unity that supports VR. In addition, I’m using Unity’s XR package and project template as opposed to any hardware-specific SDK. This means it should work automatically with any hardware Unity XR supports, and we’ll have access to some good sample assets for the controllers. However, for this sample implementation, you’ll only need XR Plugin Management and whichever hardware plugin you intend to use (Oculus XR Plugin / Windows XR Plugin / OpenVR XR Plugin). I use Oculus Link with a Quest. The implementation should be the same on Vive and Index, but you may need to use a different SDK to track the controllers’ position. The OpenVR XR Plugin should eventually fill this gap, but as of now, it appears to be a preliminary release.

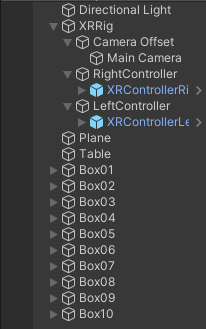

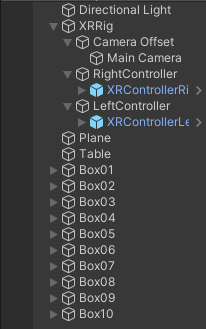

To start, prepare a project for XR following Unity’s instructions here, or create a new project using the VR template. If you start from the template, you’ll have a sample scene with an XR Rig already, and you can skip ahead. If not, open a new scene and select GameObject -> XR -> Convert Main Camera to XR Rig. That will set up the Main Camera to support VR. Make two child GameObjects under XR Rig and attach a TrackedPoseDriver component to each. Make sure each TrackedPoseDriver’s “Device” is set to “Generic XR Controller,” and change “Pose Source” on one to “Left Controller” and on the other to “Right Controller.” Lastly, assign some kind of mesh as a child of each controller GameObject so you can see your hands in the headset. I also created a box to act as a table and a bunch of small boxes with Rigidbody components so that my hands have something to collide with.

Once you’ve reached that point, what you should see when you hit play are your in-game hands matching the position of your actual hands. However, our virtual hands don’t have colliders and are not set up to properly interact with PhysX (Unity’s built-in physics engine). Attach colliders to your hands and attach a Rigidbody to the GameObjects that have the TrackedPoseDriver component. Set “Is Kinematic” on both Rigidbodies to true and press play. You should see something like this.
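If you prefer to apply that Inspector setting from a script, a minimal sketch might look like the following. `KinematicHandSetup` and its `Configure` method are hypothetical names I’m introducing for illustration; they are not part of the sample project.

```csharp
using UnityEngine;

// Hypothetical helper, not part of the original sample: applies the
// "Is Kinematic" setting described above to the Rigidbody on a tracked hand.
[RequireComponent(typeof(Rigidbody))]
public class KinematicHandSetup : MonoBehaviour
{
    // Marks the body kinematic so the Transform, not the physics solver, moves it.
    public static void Configure(Rigidbody body)
    {
        body.isKinematic = true;
    }

    void Awake()
    {
        // RequireComponent guarantees a Rigidbody exists on this GameObject.
        Configure(GetComponent<Rigidbody>());
    }
}
```

A helper like this only makes sense for the kinematic experiment at this stage; it would need to be removed once the hands stop being kinematic.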

The boxes with Rigidbodies do react to my hands, but the interaction is one-way, and my hands pass right through colliders without a Rigidbody. Everything feels weightless, and my hands feel more like ghost hands than real, physical hands. That’s only the start of the limitations of the approach as well; Kinematic Rigidbody colliders have a lot of limitations that you’d start to uncover once you begin to make objects you want your player to hold, grab, pull or otherwise interact with. Let’s try to fix that.

First, because TrackedPoseDriver works on the Transform it’s attached to, we’ll need to separate the TrackedPoseDriver from the Rigidbody hand. Otherwise, the Rigidbody’s velocity and the TrackedPoseDriver will fight each other for who changes the GameObject’s position. Create two new GameObjects for the TrackedPoseDrivers, remove the TrackedPoseDrivers from the GameObjects with the Rigidbodies, and attach the TrackedPoseDrivers to the newly created GameObjects. I called my new GameObjects Right and Left Controller, and I renamed my hands to Right and Left Hand.

Create a “PhysicsHand” script. The hand script will only do two things: drive the velocity and the angular velocity of the hand Rigidbodies so that they follow the TrackedPoseDriver. Let’s start with position. Usually, it’s recommended that you not directly overwrite the velocity of a Rigidbody because it leads to unpredictable behavior. However, we need to do that here because we’re indirectly mapping the velocity of the player’s real hands to the VR Rigidbody hands. Thankfully, just matching velocity is not all that hard.

    using UnityEngine;

    public class PhysicsHand : MonoBehaviour
    {
        public Transform trackedTransform = null; // Transform moved by the TrackedPoseDriver
        public Rigidbody body = null;             // Rigidbody of the physical hand
        public float positionStrength = 15;

        void FixedUpdate()
        {
            // Direction towards the tracked position, scaled by strength and by distance.
            var vel = (trackedTransform.position - body.position).normalized * positionStrength * Vector3.Distance(trackedTransform.position, body.position);
            body.velocity = vel;
        }
    }

Attach this component to the same GameObjects as the Rigidbodies, assign the appropriate Rigidbody to each, and assign the Right and Left Controller Transform reference to each as well. Make sure you turn off “Is Kinematic” on the Rigidbodies, then hit play. You should see something like this.

With this, we have movement! The hands track the controllers’ position but not the rotation, so it kind of feels like they’re floating in space. But they do respond to all collisions, and they cannot go into static colliders. What we’re doing here is getting the normalized vector towards the tracked position by subtracting the Rigidbody position from it. We’re adding a little bit of extra oomph to the tracking with position strength, and we’re weighting the velocity by the distance from the tracked position. One extra note: position strength works well at 15 if the hand’s mass is set to 1; if you make heavier or lighter hands, you’ll likely need to tune that number a little. Lastly, we could try other methods, like attempting to calculate the hands’ actual velocity from their current and previous positions. We could even use a joint between the tracked controllers and the hands, but I personally find custom code easier to work with.
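To make the arithmetic concrete: normalizing the offset and then multiplying by its length gives back the offset itself, so the velocity is simply the offset scaled by position strength. The sketch below shows that, along with the finite-difference alternative mentioned above. `HandVelocitySketch` and its method names are hypothetical helpers for illustration, not part of the PhysicsHand script.

```csharp
using UnityEngine;

// Hypothetical helpers for illustration only; they are not part of PhysicsHand.
public static class HandVelocitySketch
{
    // Velocity proportional to the offset between target and current position.
    // Equivalent to offset.normalized * strength * offset.magnitude.
    public static Vector3 ProportionalVelocity(Vector3 target, Vector3 current, float strength)
    {
        return (target - current) * strength;
    }

    // Finite-difference estimate of how fast a tracked point moved over one
    // step of length dt (in seconds), from its previous and current positions.
    public static Vector3 MeasuredVelocity(Vector3 previous, Vector3 current, float dt)
    {
        return (current - previous) / dt;
    }
}
```

With a strength of 15, a hand 0.1 m from its target gets a speed of 1.5 m/s. Assuming Unity’s default fixed timestep of 0.02 s, that closes roughly 30% of the remaining gap per physics step, which is why the hand eases into place rather than teleporting.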

Next, we’ll do rotation, and unfortunately, the best solution I’ve found is more complex. In real-world engineering, one common way to iteratively change one value to match another is an algorithm called a PD controller, or proportional-derivative controller. Through some trial and error and following along with the implementations shown in this blog post, we can write another block of code that calculates how much torque to apply to the Rigidbodies to iteratively move them towards the tracked hand’s rotation.

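    using UnityEngine;

    public class PhysicsHand : MonoBehaviour
    {
        public Transform trackedTransform = null;
        public Rigidbody body = null;
        public float positionStrength = 20;
        public float rotationStrength = 30;

        void FixedUpdate()
        {
            // Position: velocity towards the tracked position, weighted by distance.
            var vel = (trackedTransform.position - body.position).normalized * positionStrength * Vector3.Distance(trackedTransform.position, body.position);
            body.velocity = vel;

            // Rotation: PD controller gains derived from a single strength value.
            float kp = (6f * rotationStrength) * (6f * rotationStrength) * 0.25f;
            float kd = 4.5f * rotationStrength;

            // Rotation from the hand's current orientation to the tracked one, as axis and angle.
            Vector3 x;
            float xMag;
            Quaternion q = trackedTransform.rotation * Quaternion.Inverse(transform.rotation);
            q.ToAngleAxis(out xMag, out x);
            // ToAngleAxis can report angles above 180 degrees; take the short way around.
            if (xMag > 180f) xMag -= 360f;
            x.Normalize();
            x *= Mathf.Deg2Rad;

            // Proportional term on the angle error, derivative term on the angular velocity.
            Vector3 pidv = kp * x * xMag - kd * body.angularVelocity;

            // Scale by the inertia tensor in its own frame, then convert back to world space.
            Quaternion rotInertia2World = body.inertiaTensorRotation * transform.rotation;
            pidv = Quaternion.Inverse(rotInertia2World) * pidv;
            pidv.Scale(body.inertiaTensor);
            pidv = rotInertia2World * pidv;
            body.AddTorque(pidv);
        }
    }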

With the exception of the kp and kd values, whose calculation I simplified, this code is largely the same as the original author wrote it. The author also has implementations for using a PD controller to track position, but I had trouble fine-tuning their implementation. At Amebous Labs, we use the simpler method shown here, but using a PD controller is likely possible with more work.
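For intuition about what the kp and kd terms do, here is a one-dimensional PD controller stripped of all Unity types. Treat it as a sketch under assumptions: the class name, the unit-inertia model, and the simple Euler integration are mine, not the referenced blog post’s or Unity’s. `DampingRatio` uses the standard second-order relationship for a unit-inertia system, kd / (2 * sqrt(kp)).

```csharp
using System;

// Illustrative sketch only: a 1-D PD controller acting on a unit-inertia value.
// Names and the integration scheme are assumptions, not the article's code.
public static class PdControllerSketch
{
    // Gains computed from a single strength value, as in PhysicsHand.
    public static float Kp(float strength) { return (6f * strength) * (6f * strength) * 0.25f; }
    public static float Kd(float strength) { return 4.5f * strength; }

    // PD output: proportional to the error, damped by the current rate of change.
    public static float Output(float kp, float kd, float error, float rate)
    {
        return kp * error - kd * rate;
    }

    // Damping ratio of the equivalent continuous system with unit inertia.
    // Below 1 the step response overshoots; at or above 1 it does not.
    public static float DampingRatio(float kp, float kd)
    {
        return kd / (2f * (float)Math.Sqrt(kp));
    }

    // Steps a value toward target for a number of fixed time steps.
    public static float Simulate(float start, float target, float kp, float kd, float dt, int steps)
    {
        float value = start;
        float rate = 0f;
        for (int i = 0; i < steps; i++)
        {
            float accel = Output(kp, kd, target - value, rate);
            rate += accel * dt;   // integrate acceleration into rate
            value += rate * dt;   // integrate rate into value
        }
        return value;
    }
}
```

For example, `PdControllerSketch.Simulate(0f, 1f, 100f, 20f, 0.01f, 500)` settles very close to 1. Plugging in the gains used in PhysicsHand, `DampingRatio(Kp(s), Kd(s))` comes out at 0.75 for any positive strength `s`, which in this simplified model means a slightly underdamped response.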

Now, if you run the PhysicsHand script as it stands, you’ll find that it mostly works, but the Rigidbody vibrates at certain angles. This problem plagues PD controllers: without good tuning, they oscillate around their target value. We could spend time fine-tuning our PD controller, but I find it easier to simply assign Transform values once they’re below a certain threshold. In fact, I’d recommend doing that for both position and rotation, partially because you’ll eventually realize you’ll need to snap the Rigidbody back in case it gets stuck somewhere. Let’s just resolve all three cases at once.

    using UnityEngine;

    public class PhysicsHand : MonoBehaviour
    {
        public Transform trackedTransform = null;
        public Rigidbody body = null;

        public float positionStrength = 20;
        public float positionThreshold = 0.005f; // snap when closer than this
        public float maxDistance = 1f;           // snap when farther than this (hand got stuck)

        public float rotationStrength = 30;
        public float rotationThreshold = 10f;    // degrees; snap when closer than this

        void FixedUpdate()
        {
            // Position: snap when very close or too far away, otherwise chase with velocity.
            var distance = Vector3.Distance(trackedTransform.position, body.position);
            if (distance > maxDistance || distance < positionThreshold)
            {
                body.MovePosition(trackedTransform.position);
            }
            else
            {
                var vel = (trackedTransform.position - body.position).normalized * positionStrength * distance;
                body.velocity = vel;
            }

            // Rotation: snap when nearly aligned, otherwise apply PD-controlled torque.
            float angleDistance = Quaternion.Angle(body.rotation, trackedTransform.rotation);
            if (angleDistance < rotationThreshold)
            {
                body.MoveRotation(trackedTransform.rotation);
            }
            else
            {
                float kp = (6f * rotationStrength) * (6f * rotationStrength) * 0.25f;
                float kd = 4.5f * rotationStrength;

                // Rotation from the hand's current orientation to the tracked one, as axis and angle.
                Vector3 x;
                float xMag;
                Quaternion q = trackedTransform.rotation * Quaternion.Inverse(transform.rotation);
                q.ToAngleAxis(out xMag, out x);
                // ToAngleAxis can report angles above 180 degrees; take the short way around.
                if (xMag > 180f) xMag -= 360f;
                x.Normalize();
                x *= Mathf.Deg2Rad;

                // Proportional term on the angle error, derivative term on the angular velocity.
                Vector3 pidv = kp * x * xMag - kd * body.angularVelocity;

                // Scale by the inertia tensor in its own frame, then convert back to world space.
                Quaternion rotInertia2World = body.inertiaTensorRotation * transform.rotation;
                pidv = Quaternion.Inverse(rotInertia2World) * pidv;
                pidv.Scale(body.inertiaTensor);
                pidv = rotInertia2World * pidv;
                body.AddTorque(pidv);
            }
        }
    }

With that, our PhysicsHand is complete! Clearly, a lot more could go into this implementation, and this is only the first step when creating physics-based hands in VR. However, it’s the portion that I had a lot of trouble working out, and I hope it helps your VR development.


If you found this blog helpful, please feel free to share and to follow us @LoamGame on Twitter!