UNITY UI REMOTE INPUT

Written by Pierce McBride

Many VR (virtual reality) games and experiences need the player to interact with a UI (user interface). In Unity, these are commonly world-space canvases that the player operates with some type of laser pointer or touches directly with their virtual hands. Like me, you may have wanted to implement such a system and run into two questions: how do we determine which UI elements the player is pointing at, and how can they interact with those elements? This article is a bit technical and assumes some intermediate knowledge of C#. I’ll also say now that the code in this article is heavily based on the XR Interaction Toolkit provided by Unity. I’ll point out where I took code from that package as it comes up, but the intent is for this code to work entirely independently of any specific hardware. If you want to interact with world-space UI with a world-space pointer and you know a little C#, read on.

Figure 1 – Cities VR (Fast Travel Games, 2022)

Figure 2 – Resident Evil 4 VR (Armature Studio, 2021)

Figure 3 – The Walking Dead: Saints and Sinners (Skydance Interactive, 2020)

You might think, “I’ll just use Physics.Raycast to find out what the player is pointing at,” and unfortunately, you would be wrong like I was. That’s a little glib, but in essence, there are two problems with that approach:

- Unity uses a combination of the EventSystem, GraphicRaycasters, and screen positions to determine UI input, so with its default configuration, Physics.Raycast would miss the UI entirely.

- Even if you attach colliders and find the GameObject hit with a UI component, you’d need to replicate a lot of code that Unity provides for free. Pointers don’t just click whatever component they’re over; they can also drag and scroll.

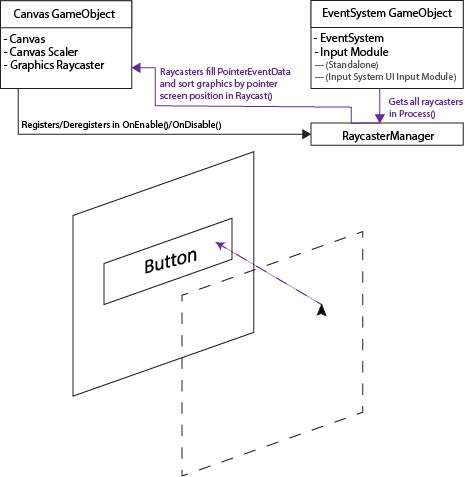

In order to explain the former problem, it’s best to make sure we both understand how the existing EventSystem works. By default, when a player moves a pointer (like a mouse) around or presses some input that we have mapped to UI input, like select or move, Unity picks up this input from an InputModule component attached to the same GameObject as the EventSystem. This is, by default, either the Standalone Input Module or the Input System UI Input Module. Both work the same way, but they use different input APIs.

Each frame, the EventSystem calls a method in the input module named Process(). In the standard implementations, the input module gets a reference to all enabled BaseRaycaster components from a static RaycasterManager class. By default, these are GraphicRaycasters for most games. For each of those raycasters, the input module calls Raycast(PointerEventData eventData, List<RaycastResult> resultAppendList), which takes a PointerEventData object and a list to append new results to. The raycaster finds the Graphic objects on its canvas that line up with a ray from the pointer’s screen position to the canvas, sorts them by hierarchy order, and appends them to the list. The input module then takes that list and processes any events like selecting, dragging, etc.

Figure 4 Event System Explanation Diagram

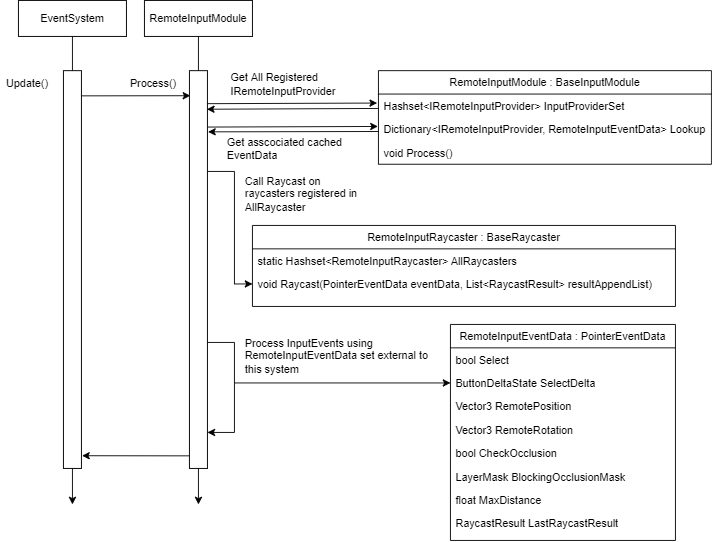

So how will these objects fit together? Instead of the process described above, we’ll replace the input module with a RemoteInputModule and each raycaster with a RemoteInputRaycaster, create a new kind of pointer data called RemoteInputEventData, and finally define an interface called IRemoteInputProvider. You’ll implement the interface yourself to fit the needs of your project. Its job is to register itself with the input module, update a cached copy of RemoteInputEventData each frame with its current position and rotation, and set the select state, which we’ll use to actually select UI elements.

Each time the EventSystem calls Process() on our RemoteInputModule, we’ll refer to a collection of registered IRemoteInputProviders and retrieve the corresponding RemoteInputEventData for each. InputProviderSet is a HashSet for fast lookup and no duplicate items. Lookup is a Dictionary so we can easily associate each provider with its cached event data. We cache event data so we can properly process UI interactions that take place over multiple frames, like drags. We also presume that each event data has already been updated with a new position, rotation, and selection state. Again, it’s your job as a developer to define how that happens, but the RemoteInput package will come with one out of the box and a sample implementation that uses keyboard input that you can review.
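To make the registration idea concrete, here is a minimal sketch of the HashSet-plus-Dictionary bookkeeping in plain C#, with no Unity dependency. The method names SetRegistration and GetRemoteInputEventData come from this article; everything else (the class names ending in Sketch, the empty interface, the FramesSeen field) is a hypothetical stand-in, and the real package may well be organized differently.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in: the real interface exposes position, rotation, select state, etc.
public interface IRemoteInputProvider { }

// Hypothetical stand-in for the cached per-provider event data.
public class RemoteInputEventData
{
    public int FramesSeen; // placeholder for state that must survive across frames (e.g. drags)
}

// Hypothetical provider used only to exercise the sketch.
public class KeyboardProviderSketch : IRemoteInputProvider { }

public class RemoteInputModuleSketch
{
    // HashSet: fast membership checks and no duplicate providers.
    private readonly HashSet<IRemoteInputProvider> _inputProviderSet =
        new HashSet<IRemoteInputProvider>();

    // Dictionary: associates each provider with its cached event data.
    private readonly Dictionary<IRemoteInputProvider, RemoteInputEventData> _lookup =
        new Dictionary<IRemoteInputProvider, RemoteInputEventData>();

    public int ProviderCount => _inputProviderSet.Count;

    public void SetRegistration(IRemoteInputProvider provider, bool isRegistered)
    {
        if (isRegistered)
        {
            // Add returns false for an existing provider, so repeat calls keep the cached data.
            if (_inputProviderSet.Add(provider))
                _lookup[provider] = new RemoteInputEventData();
        }
        else
        {
            _inputProviderSet.Remove(provider);
            _lookup.Remove(provider);
        }
    }

    // Returns the cached event data, or null if the provider is not registered.
    public RemoteInputEventData GetRemoteInputEventData(IRemoteInputProvider provider)
    {
        return _lookup.TryGetValue(provider, out var data) ? data : null;
    }
}
```

Because registration is idempotent in this sketch, a provider can safely call SetRegistration(this, true) every frame without losing the event data that carries a drag from one frame to the next.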

Next, we need all the RemoteInputRaycasters. We could use Unity’s RaycasterManager, iterate over it, and call Raycast() on every BaseRaycaster that we can cast to our inherited type, but to me, this sounds slightly less efficient than we want. We aren’t implementing any code that could support normal pointer events from a mouse or touch, so there’s not really any point in iterating over a list that could contain many elements we have to skip. Instead, I added a static HashSet to RemoteInputRaycaster that each instance registers with in OnEnable and deregisters from in OnDisable, just like the normal RaycasterManager. But in this case, we can be sure each item is the right type, and we only iterate over items that we can use. We call Raycast(), which creates a new ray from the provider’s given position, rotation, and max length. It sorts all its canvas graphics components just like the regular raycaster.

Last, we take the list that all the RemoteInputRaycasters have appended to and process UI events. SelectDelta is more critical than SelectDown. Technically our input module only needs to know the delta state because most events are driven when select is pressed or released only. In fact, RemoteInputModule will set the value of SelectDelta to NoChange after it’s processed each event data. That way, it’s only ever pressed or released for exactly one provider.
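The down/up behavior can be sketched in a few lines of plain C#. The names SelectDown, SelectDelta, and NoChange appear in this article; the Pressed and Released members, the SelectStateSketch class, and its two methods are assumptions made for illustration, not the package’s actual API.

```csharp
// Pressed and Released are assumed member names; only NoChange is named in the article.
public enum ButtonDeltaState { NoChange, Pressed, Released }

// Hypothetical helper that turns a raw "is the button held" value into one-shot deltas.
public class SelectStateSketch
{
    public bool SelectDown { get; private set; }
    public ButtonDeltaState SelectDelta { get; private set; } = ButtonDeltaState.NoChange;

    // Call whenever the raw button state is sampled.
    public void SetSelect(bool isDown)
    {
        if (isDown == SelectDown)
            return; // no transition, so leave any unconsumed delta alone

        SelectDown = isDown;
        SelectDelta = isDown ? ButtonDeltaState.Pressed : ButtonDeltaState.Released;
    }

    // Call after the events for this frame have been processed.
    public void ConsumeDelta()
    {
        SelectDelta = ButtonDeltaState.NoChange;
    }
}
```

Holding the button keeps SelectDown true, but SelectDelta reports Pressed only until it is consumed, which is the same shape as the mouse down and up events the UI already expects.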

Figure 5 Remote Input Process Event Diagram

For you, the most important code to review would be our RemoteInputSender because you should only need to replace your input module and all GraphicRaycasters for this to work. Thankfully, beyond implementing the required properties on the interface, the minimum setup is quite simple.

    void OnEnable()
    {
        ValidateProvider();
        ValidatePresentation();
    }

    void OnDisable()
    {
        _cachedRemoteInputModule?.Deregister(this);
    }

    void Update()
    {
        if (!ValidateProvider())
            return;

        _cachedEventData = _cachedRemoteInputModule.GetRemoteInputEventData(this);
        UpdateLine(_cachedEventData.LastRaycastResult);
        _cachedEventData.UpdateFromRemote();

        SelectDelta = ButtonDeltaState.NoChange;

        if (ValidatePresentation())
            UpdatePresentation();
    }

Validating our provider means getting a reference to the RemoteInputModule through the singleton reference EventSystem.current and registering ourselves with SetRegistration(this, true). Because we store registered providers in a HashSet, you can call this each time, and no duplicate entries will be added. Validating our presentation means updating the properties on our LineRenderer if it’s been set in the inspector.

    bool ValidatePresentation()
    {
        _lineRenderer = (_lineRenderer != null) ? _lineRenderer : GetComponent<LineRenderer>();
        if (_lineRenderer == null)
            return false;

        _lineRenderer.widthMultiplier = _lineWidth;
        _lineRenderer.widthCurve = _widthCurve;
        _lineRenderer.colorGradient = _gradient;
        _lineRenderer.positionCount = _points.Length;

        if (_cursorSprite != null && _cursorMat == null)
        {
            _cursorMat = new Material(Shader.Find("Sprites/Default"));
            _cursorMat.SetTexture("_MainTex", _cursorSprite.texture);
            _cursorMat.renderQueue = 4000; // Set renderqueue so it renders above existing UI
            // There's a known issue here where this cursor does NOT render above dropdown components.
            // it's due to something in how dropdowns create a new canvas and manipulate its sorting order,
            // and since we draw our cursor directly to the Graphics API we can't use CPU-based sorting layers
            // if you have this issue, I recommend drawing the cursor as an unlit mesh instead
            if (_cursorMesh == null)
                _cursorMesh = Resources.GetBuiltinResource<Mesh>("Quad.fbx");
        }

        return true;
    }

RemoteInputSender will still work if no LineRenderer is added, but if you add one, it’ll update it via the properties on the sender each frame. As an extra treat, I also added a simple “cursor” implementation that creates a cached quad, assigns a sprite material to it that uses a provided sprite, and aligns it per-frame to the endpoint of the remote input ray. Take note of Resources.GetBuiltinResource<Mesh>("Quad.fbx"). This line gets the same file that’s used if you hit Create -> 3D Object -> Quad and works at runtime because it’s a part of the Resources API. Refer to this link for more details and other strings you can use to find the other built-in resources.

The two most important lines in Update are _cachedEventData.UpdateFromRemote() and SelectDelta = ButtonDeltaState.NoChange. The first line will automatically set all properties in the event data object based on the properties implemented from the provider interface. As long as you call this method every frame and you write your properties correctly, the provider will work. The second resets the SelectDelta property, which is used to determine if the remote provider just pressed or released select. The UI is built around input down and up events, so we need to mimic that behavior and make sure that if SelectDelta changes in a frame, it only remains pressed or released for exactly one frame.

    void UpdateLine(RaycastResult result)
    {
        _hasHit = result.isValid;

        if (result.isValid)
        {
            _endpoint = result.worldPosition;
            // result.worldNormal doesn't work properly, seems to always have the normal face directly up
            // instead, we calculate the normal via the inverse of the forward vector on what we hit. Unity UI elements
            // by default face away from the user, so we use that assumption to find the true "normal"
            // If you use a curved UI canvas this likely will not work
            _endpointNormal = result.gameObject.transform.forward * -1;
        }
        else
        {
            _endpoint = transform.position + transform.forward * _maxLength;
            _endpointNormal = (transform.position - _endpoint).normalized;
        }

        _points[0] = transform.position;
        _points[_points.Length - 1] = _endpoint;
    }

    void UpdatePresentation()
    {
        _lineRenderer.enabled = ValidRaycastHit;

        if (!ValidRaycastHit)
            return;

        _lineRenderer.SetPositions(_points);

        if (_cursorMesh != null && _cursorMat != null)
        {
            _cursorMat.color = _gradient.Evaluate(1);
            // Note: Quaternion.Euler reads the vector as Euler angles in degrees, not as a direction.
            var matrix = Matrix4x4.TRS(_points[1], Quaternion.Euler(_endpointNormal), Vector3.one * _cursorScale);
            Graphics.DrawMesh(_cursorMesh, matrix, _cursorMat, 0);
        }
    }

UpdateLine and UpdatePresentation round out RemoteInputSender. Because the LastRaycastResult is cached in the event data, we can use it to update our presentation efficiently. In most cases we likely just want to render a line from the provider to the UI that’s being used, so we use an array of Vector3 that’s of length 2 and update the first and last position with the provider position and raycast endpoint that the raycaster updated. There’s an issue currently where the world normal isn’t set properly, and since we use it with the cursor, we set it ourselves instead: from the hit object’s forward vector when there is a hit, and from the start and end points otherwise. When we update presentation, we set the positions of the line renderer, and we draw the cursor if we can. The cursor is drawn directly using the Graphics API, so if there’s no hit, it won’t be drawn and has no additional GameObject or component overhead.

I would encourage you to read the implementation in the repo, but at a high level, the diagrams and explanations above should be enough to use the system I’ve put together. I acknowledge that portions of the code use methods written for Unity’s XR Interaction Toolkit, but I prefer this implementation because it is more flexible and has no additional dependencies. I would expect most developers who need to solve this problem are working in XR, but if you need to use world space UIs and remotes in a non-XR game, this implementation would work just as well.

Figure 6 Non-VR Demo

Figure 7 VR Demo

Cheers, and good luck building your game ✌️


THE DESIGN OF EVERYDAY VR THINGS

Written by Pierce McBride

If you read my last post on this blog, Developing Physics-Based VR Hands in Unity, you’d know that I think hands are the most interesting aspect of virtual reality (VR) game design. All the things we as humans do with our hands have existed in games, sure. Games have doors you can open, buttons you can press, and objects you can throw, for example. But these interactions have to be mediated by the mechanical objects we call controllers, keyboards, and mice. These objects force us to simplify what you, the player playing the game, must physically do to make your avatar do something in return. Opening a door or pressing a button becomes pressing X. Throwing a ball becomes flicking an analog stick.


These work fine, but VR offers something more tactile, and therein lies the challenge, both for us at Amebous Labs and for any developer working in VR. In VR, we have a 1-to-1 mapping of your physical hand’s position and rotation. We also have a pretty good approximation of your hand “gripping” in the grip or trigger buttons, depending on your platform. That’s pretty cool! It means we can make a virtual door that you physically grab and physically pull open, just like a real door. Now you might not need that “Press X to” prompt on the screen. Who needs help opening a door? As it turns out, a lot of people. Exchanging a controller for a hand just changed the kind of design we need to think about.

Call of Duty: Advanced Warfare

In fact, confusing doors are an issue so common in the broader field of design that they’re a joke unto themselves. They’re called Norman Doors, named after Don Norman. Norman wrote a very influential book on design called The Design of Everyday Things. The book is about usability and ergonomics, which are fields of study about how the shape, placement, color, and other factors of objects in our world teach us how to use them or how they work. One of the more famous examples of confusing design is door handles. To Norman, the shape indicates not just how you grasp the door but also what direction it swings or slides. These factors are just as important in VR precisely because hands are how we use them. Even if our doors are virtual, we still use them like real doors. For example, in Boneworks, your VR body can collide with doors, but the game often has you pull doors open. They get the handles right, and the shape suggests grasping and pulling. However, the doors sometimes swing inwards. That’s tough in VR because it’s hard to move your body around the door as you open it. The game is otherwise great! But I often accidentally bumped doors closed when opening them or trying to walk around them. In Loam, we’re planning on using sliding doors to alleviate this problem.

Door in Boneworks

Door in Loam

This kind of thinking really should impact any portion of VR design that involves the player. In Vacation Simulator, you can adjust the height of most tables, so they’re comfortable for how you’re playing. In Loam, we put the handle of the watering can on the back so you wouldn’t have to tilt your wrist as much as you play. We even picked a long, normally two-handed shovel and not a trowel because we don’t want to make players bend down repeatedly. This only scratches the surface of the field, and I’d recommend that all designers, but especially VR designers, look for solutions in the way actual objects solve similar problems.

Oh yeah, and read The Design of Everyday Things.


DEVELOPING PHYSICS-BASED VR HANDS IN UNITY

Written by Pierce McBride

At Amebous Labs, I certainly wasn’t the only person who was excited to play Half-Life: Alyx last year. A few of us were eager to try the game when it came out, and I liked it enough to write about it. I liked a lot of things about the game, a few I didn’t, but more importantly for this piece, Alyx exemplifies what excites me about VR. It’s not the headset; surprisingly, it’s the hands.

What excites me about VR sometimes is not the headset, but the hands.

Half-Life: Alyx

More than most other virtual reality (VR) games, Alyx makes me use my hands in a way that feels natural. I can push, pull, grab, and throw objects. Sometimes I’m pulling wooden planks out of the way in a doorframe. Sometimes I’m searching lockers for a few more pieces of ammunition. That feels revelatory, but at least at the time, I wasn’t entirely sure how they did it. I’ve seen the way other games often implement hands, and I had implemented a few myself. Simple implementations usually result in something…a little less exciting.

Skyrim VR

Alyx set a new bar for object interaction, but implementing something like it took some experimentation, which I’ll show you below.

For this quick sample, I’ll be using Unity 2020.2.2f1. It’s the most recent version as of the time of writing, but this implementation should work for any version of Unity that supports VR. In addition, I’m using Unity’s XR package and project template as opposed to any hardware-specific SDK. This means it should work with any hardware Unity XR supports automatically, and we’ll have access to some good sample assets for the controllers. However, for this sample implementation, you’ll only need the XR Plugin Management and whichever hardware plugin you intend on using (Oculus XR Plugin / Windows XR plugin / OpenVR XR Plugin). I use Oculus Link, and a Quest, Vive, and Index. Implementation should be the same, but you may need to use a different SDK to track the controllers’ position. The OpenVR XR plugin should eventually fill this gap, but as of now, it appears to be a preliminary release.

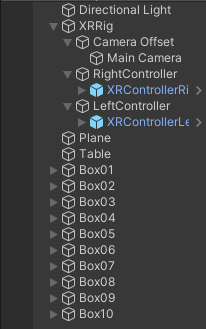

To start, prepare a project for XR following Unity’s instructions here, or create a new project using the VR template. If you start from the template, you’ll have a sample scene with an XR Rig already, and you can skip ahead. If not, open a new scene and select GameObject -> XR -> Convert Main Camera to XR Rig. That will give you a Main Camera set up to support VR. Make two child GameObjects under XR Rig and attach a TrackedPoseDriver component to each. Make sure each TrackedPoseDriver’s Device is set to “Generic XR Controller” and change “Pose Source” on one to “Left Controller” and on the other to “Right Controller.” Lastly, assign some kind of mesh as a child of each controller GameObject so you can see your hands in the headset. I also created a box to act as a table and a bunch of small boxes with Rigidbody components so that my hands have something to collide with.

Once you’ve reached that point, what you should see when you hit play are your in-game hands matching the position of your actual hands. However, our virtual hands don’t have colliders and are not set up to properly interact with PhysX (Unity’s built-in physics engine). Attach colliders to your hands and attach a Rigidbody to the GameObjects that have the TrackedPoseDriver component. Set “Is Kinematic” on both Rigidbodies to true and press play. You should see something like this.

The boxes with Rigidbodies do react to my hands, but the interaction is one-way, and my hands pass right through colliders without a Rigidbody. Everything feels weightless, and my hands feel more like ghost hands than real, physical hands. That’s only the start of the limitations of the approach as well; Kinematic Rigidbody colliders have a lot of limitations that you’d start to uncover once you begin to make objects you want your player to hold, grab, pull or otherwise interact with. Let’s try to fix that.

First, because TrackedPoseDriver works on the Transform it’s attached to, we’ll need to separate the TrackedPoseDriver from the Rigidbody hand. Otherwise, the Rigidbody’s velocity and the TrackedPoseDriver will fight each other for who changes the GameObject’s position. Create two new GameObjects for the TrackedPoseDrivers, remove the TrackedPoseDrivers from the GameObjects with the Rigidbodies, and attach the TrackedPoseDrivers to the newly created GameObjects. I called my new GameObjects Right and Left Controller, and I renamed my hands to Right and Left Hand.

Create a “PhysicsHand” script. The hand script will only do two things: match the velocity and angular velocity of the hand Rigidbodies to the TrackedPoseDriver. Let’s start with position. Usually, it’s recommended that you not directly overwrite the velocity of a Rigidbody because it leads to unpredictable behavior. However, we need to do that here because we’re indirectly mapping the velocity of the player’s real hands to the VR Rigidbody hands. Thankfully, just matching velocity is not all that hard.

    using UnityEngine;

    public class PhysicsHand : MonoBehaviour
    {
        public Transform trackedTransform = null;
        public Rigidbody body = null;

        public float positionStrength = 15;

        void FixedUpdate()
        {
            // Head straight for the tracked position, faster the further away we are
            var vel = (trackedTransform.position - body.position).normalized * positionStrength * Vector3.Distance(trackedTransform.position, body.position);
            body.velocity = vel;
        }
    }

Attach this component to the same GameObjects as the Rigidbodies, assign the appropriate Rigidbody to each, and assign the Right and Left Controller Transform reference to each as well. Make sure you turn off “Is Kinematic” on the Rigidbodies and hit play. You should see something like this.

With this, we have movement! The hands track the controllers’ position but not the rotation, so it kind of feels like they’re floating in space. But they do respond to all collisions, and they cannot go into static colliders. What we’re doing here is getting the normalized vector towards the tracked position by subtracting the Rigidbody position from it. We’re adding a little bit of extra oomph to the tracking with position strength, and we’re weighting the velocity by the distance from the tracked position. One extra note: position strength works well at 15 if the hand’s mass is set to 1; if you make heavier or lighter hands, you’ll likely need to tune that number a little. Lastly, we could try other methods, like attempting to calculate the hands’ actual velocity from their current and previous positions. We could even use a joint between the tracked Transform and the hands, but I personally find custom code easier to work with.
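One way to read the velocity line: a normalized direction multiplied by the distance is just the offset vector again, so the expression reduces to the offset times the position strength. The sketch below shows that reduced form in plain C#, using System.Numerics.Vector3 as a stand-in for UnityEngine.Vector3 so it runs outside Unity. The class and method names are hypothetical, and the per-step figure assumes nothing blocks the hand.

```csharp
using System.Numerics;

// Hypothetical helper, not part of the PhysicsHand script.
public static class HandTrackingSketch
{
    // Same result as (target - current).normalized * strength * distance,
    // because normalized * distance is the offset vector itself.
    public static Vector3 TrackingVelocity(Vector3 target, Vector3 current, float strength)
    {
        return (target - current) * strength;
    }

    // Fraction of the remaining gap covered in one physics step, ignoring collisions.
    public static float GapClosedPerStep(float strength, float fixedDeltaTime)
    {
        return strength * fixedDeltaTime;
    }
}
```

With a strength of 15 and Unity’s default fixed timestep of 0.02 seconds, the hand covers roughly 30% of the remaining distance each physics step, which is why it eases into place instead of teleporting. It also suggests that a strength near or above 1 / fixedDeltaTime would make the hand reach or overshoot the target within a single step.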

Next, we’ll do rotation, and unfortunately, the best solution I’ve found is more complex. In real-world engineering, one common way to iteratively change one value to match another is an algorithm called a PD controller, or proportional-derivative controller. Through some trial and error and following along with the implementations shown in this blog post, we can write another block of code that calculates how much torque to apply to the Rigidbodies to iteratively move them towards the hand’s rotation.

    using UnityEngine;

    public class PhysicsHand : MonoBehaviour
    {
        public Transform trackedTransform = null;
        public Rigidbody body = null;

        public float positionStrength = 20;
        public float rotationStrength = 30;

        void FixedUpdate()
        {
            var vel = (trackedTransform.position - body.position).normalized * positionStrength * Vector3.Distance(trackedTransform.position, body.position);
            body.velocity = vel;

            // PD gains: kp scales the rotation error, kd damps the current angular velocity
            float kp = (6f * rotationStrength) * (6f * rotationStrength) * 0.25f;
            float kd = 4.5f * rotationStrength;
            Vector3 x;
            float xMag;
            // Rotation that takes the hand's current rotation to the tracked rotation
            Quaternion q = trackedTransform.rotation * Quaternion.Inverse(transform.rotation);
            q.ToAngleAxis(out xMag, out x);
            x.Normalize();
            x *= Mathf.Deg2Rad; // xMag is in degrees, so x * xMag ends up in radians
            Vector3 pidv = kp * x * xMag - kd * body.angularVelocity;
            // Scale by the inertia tensor in its own space to turn the result into a torque
            Quaternion rotInertia2World = body.inertiaTensorRotation * transform.rotation;
            pidv = Quaternion.Inverse(rotInertia2World) * pidv;
            pidv.Scale(body.inertiaTensor);
            pidv = rotInertia2World * pidv;
            body.AddTorque(pidv);
        }
    }

With the exception of the KP and KD values, which I simplified the calculation for, this code is largely the same as the original author wrote it. The author also has implementations for using a PD controller to track position, but I had trouble fine-tuning their implementation. At Amebous Labs, we use the simpler method shown here, but using a PD controller is likely possible with more work.
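To get a feel for what the two gains do, here is a one-dimensional sketch of the same PD rule in plain C#. It is a simplified model under stated assumptions, not the PhysicsHand code: a single rotation axis, an inertia of 1, and a basic step-by-step integrator. The gain formulas are copied from the code above; the class and method names are hypothetical.

```csharp
// Hypothetical 1D model of the rotation controller; not Unity code.
public static class PdControllerSketch
{
    // Same gain formulas as the PhysicsHand script.
    public static float Kp(float strength)
    {
        return (6f * strength) * (6f * strength) * 0.25f;
    }

    public static float Kd(float strength)
    {
        return 4.5f * strength;
    }

    // Steps a unit-inertia body toward targetAngle (radians) and returns the final angle.
    public static float Simulate(float startAngle, float targetAngle, float strength, float dt, int steps)
    {
        float kp = Kp(strength);
        float kd = Kd(strength);
        float angle = startAngle;
        float angularVelocity = 0f;

        for (int i = 0; i < steps; i++)
        {
            // Proportional term pulls toward the target, derivative term resists motion.
            float torque = kp * (targetAngle - angle) - kd * angularVelocity;
            angularVelocity += torque * dt; // inertia is 1, so acceleration equals torque
            angle += angularVelocity * dt;
        }

        return angle;
    }
}
```

In this simplified model the damping ratio is kd / (2 * sqrt(kp)) = 4.5 / 6 = 0.75 for any rotation strength, which is slightly underdamped, so a little overshoot before settling is expected. For example, Simulate(0f, 1f, 5f, 0.02f, 200) settles very close to the target angle of 1 radian. Much larger strengths at the same timestep push this simple integrator toward instability, so treat the numbers as illustrative only.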

Now, if you run this code, you’ll find that it mostly works, but the Rigidbody vibrates at certain angles. This problem plagues PD controllers: without good tuning, they oscillate around their target value. We could spend time fine-tuning our PD controller, but I find it easier to simply assign Transform values once they’re below a certain threshold. In fact, I’d recommend doing that for both position and rotation, partially because you’ll eventually realize you’ll need to snap the Rigidbody back in case it gets stuck somewhere. Let’s just resolve all three cases at once.

    using UnityEngine;

    public class PhysicsHand : MonoBehaviour
    {
        public Transform trackedTransform = null;
        public Rigidbody body = null;

        public float positionStrength = 20;
        public float positionThreshold = 0.005f;
        public float maxDistance = 1f;
        public float rotationStrength = 30;
        public float rotationThreshold = 10f;

        void FixedUpdate()
        {
            var distance = Vector3.Distance(trackedTransform.position, body.position);
            // Snap when the hand is stuck far away or is already close enough
            if (distance > maxDistance || distance < positionThreshold)
            {
                body.MovePosition(trackedTransform.position);
            }
            else
            {
                var vel = (trackedTransform.position - body.position).normalized * positionStrength * distance;
                body.velocity = vel;
            }

            float angleDistance = Quaternion.Angle(body.rotation, trackedTransform.rotation);
            // Snap small rotation errors instead of letting the PD controller oscillate
            if (angleDistance < rotationThreshold)
            {
                body.MoveRotation(trackedTransform.rotation);
            }
            else
            {
                float kp = (6f * rotationStrength) * (6f * rotationStrength) * 0.25f;
                float kd = 4.5f * rotationStrength;
                Vector3 x;
                float xMag;
                Quaternion q = trackedTransform.rotation * Quaternion.Inverse(transform.rotation);
                q.ToAngleAxis(out xMag, out x);
                x.Normalize();
                x *= Mathf.Deg2Rad;
                Vector3 pidv = kp * x * xMag - kd * body.angularVelocity;
                Quaternion rotInertia2World = body.inertiaTensorRotation * transform.rotation;
                pidv = Quaternion.Inverse(rotInertia2World) * pidv;
                pidv.Scale(body.inertiaTensor);
                pidv = rotInertia2World * pidv;
                body.AddTorque(pidv);
            }
        }
    }

With that, our PhysicsHand is complete! Clearly, a lot more could go into this implementation, and this is only the first step when creating physics-based hands in VR. However, it’s the portion that I had a lot of trouble working out, and I hope it helps your VR development.


If you found this blog helpful, please feel free to share and to follow us @LoamGame on Twitter!