GDC 2022: DAY 1

Written by Nick Foster

Future Realities Summit: How NASA Has Translated Aerospace Research into Biofeedback Game Experiences

It was fascinating to see how NASA’s advanced biofeedback device research has been integrated into games. As the technology matured, biofeedback came to be used for psychophysiological modeling in virtual reality. Tracking a user’s heart rate and frontal alpha activity, and adapting the experience in response (biocybernetic adaptation), has produced more effective conflict de-escalation training. Applications built on this technology have also helped researchers better understand brain function in individuals with autism and ADHD.

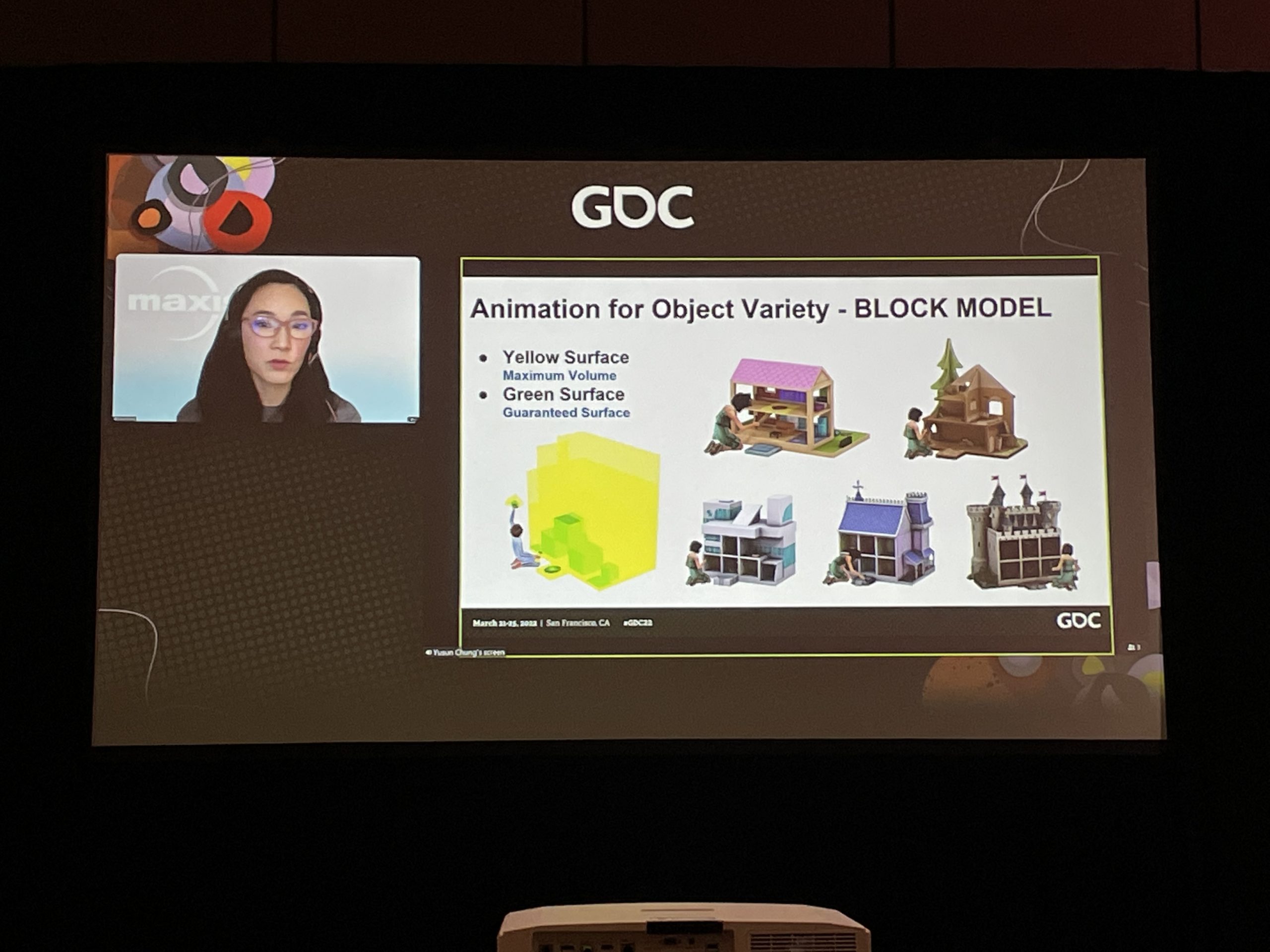

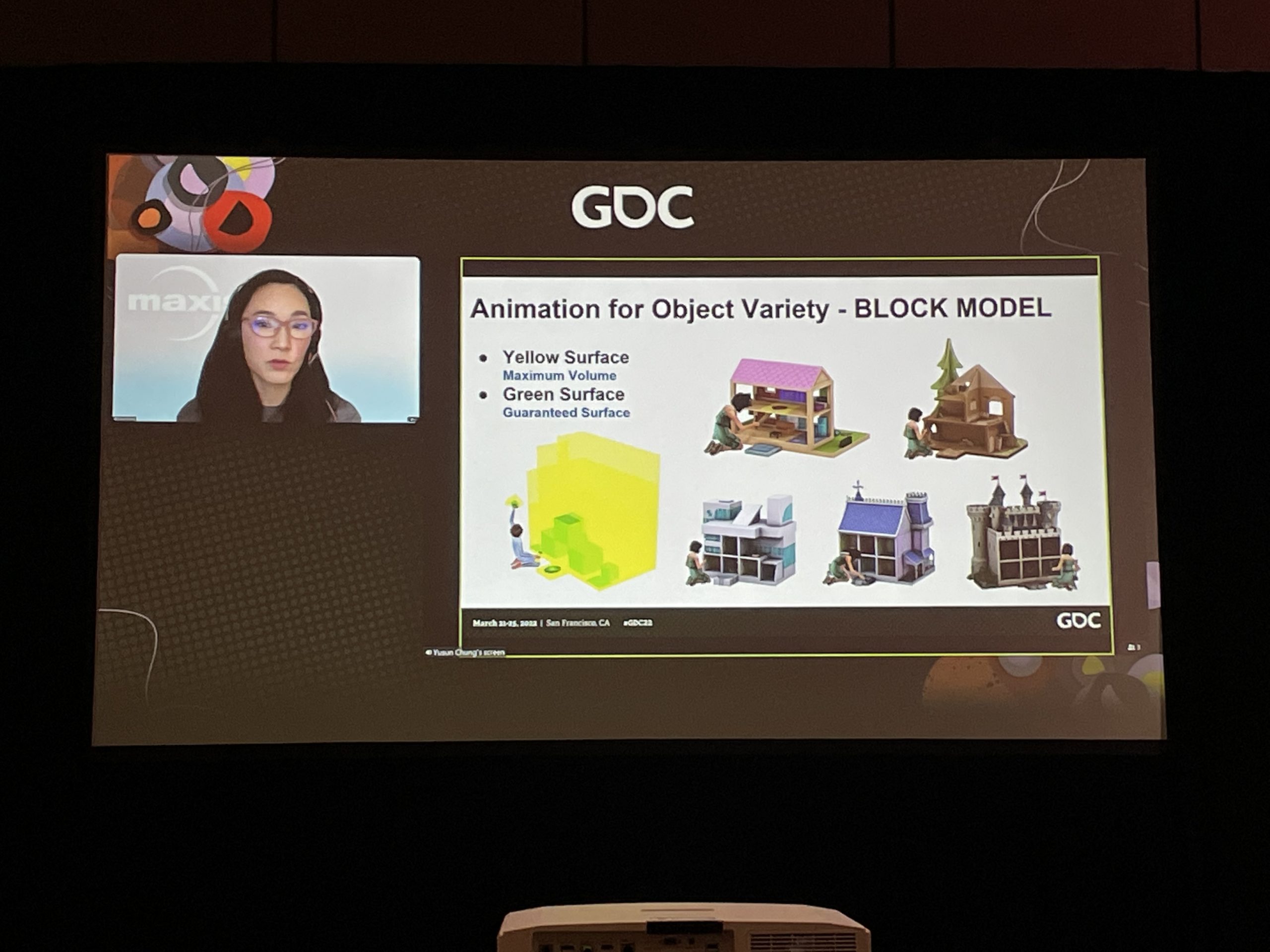

Animation Summit: Animation and Customization in ‘The Sims 4’

Yusun Chung (Lead Animator, Electronic Arts) analyzes aesthetic considerations and implementation workflows necessary to create animations for customizable characters, clothing, and interactable objects.

Pierce and I attended a great session covering animation and customization in ‘The Sims 4’. This was of particular interest because we plan to implement customization in Loam Sandbox. It was helpful to learn how to handle object variety when an asset type must feature animations or interactivity. By setting parameters for artists, an asset’s core interactivity can stay the same across variants while the outer shell can be as creative as the artist wants.

Future Realities Summit: Up Close & Virtual – The Power of Live VR Actors

Alex Coulombe (Creative Director, Agile Lens: Immersive Design) details the techniques, tools, and technology he uses to create compelling VR shows.

I attended a panel called Up Close & Virtual presented by the CEO of Heavenue, Alex Coulombe. He discussed his company’s experience creating a live VR theater performance. In his performance of A Christmas Carol, various techniques and technologies combine to create a one-man show for audiences to enjoy in VR. I was thoroughly impressed by the idea, and I wouldn’t mind exploring more of it in the future. We could be seeing the creation of a new type of theater that is unique to VR!

Honestly, talking to fellow developers throughout the day has been among the real treats of GDC. I’ve met several VR developers, and it’s interesting to hear their opinions and thoughts on game development and existing experiences for VR platforms such as PSVR, Oculus/Meta, and Vive.

Future Realities Summit: Hands Best: How AR & VR Devs Can Make the Most of Hand Tracking

Brian Schwab (Director of Interaction & Creative Play Lab, LEGO Group) and John Blau (Game Designer, Schell Games) share their philosophies for designing hand tracking in VR.

VR game development forces you to reframe the way you think about your hands and the many ways you use them to interact. It was interesting to hear John and Brian describe hands as systems through which we gather information about the things around us, by reaching out and touching or by gauging distance.

There are many factors to keep in mind when designing and implementing hand tracking for your VR apps, such as user comfort, cultural differences, and the tracking complexity of your experience. As a general rule of thumb, however, developers should first identify the type of experience they want to create before they begin designing any hand tracking for it. Once you’ve settled on that, choosing a combination of interaction models to match your goals becomes a less overwhelming process.

Visual Effects Summit: How to (Not) Create Textures for VFX

Simon Trümpler emphasizes how using photos and third-party assets saves time when making textures.

Many artists feel that creating new textures for visual effects from existing photos and third-party assets is cheating, but Simon dispelled this common belief and showed us how we can take a base asset and customize it in a way that makes it our own. His advice was to focus on getting assets to the “good enough” stage; if you find additional time later in the game development process, you can fine-tune them to give them an increasingly customized look.

One of my favorite tricks he demonstrated was taking a picture of a forest, flipping it upside down, bumping up the contrast, then combining it with other water effects he had already created. The result was a waterfall that appeared to have ripples and movement. Had he painted each line by hand, it would have taken significantly longer to create. He wrapped up the talk by encouraging artists to share their expertise and learnings on social media using #VFXTexture to streamline the texture creation process for fellow artists.
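For anyone who wants to play with the idea in code, here is a minimal sketch of the three steps from that trick: flip, boost contrast, then blend with an existing effect. It is not Simon’s workflow or tooling. The function names, the tiny grayscale grids standing in for real images, and the contrast and blend values are all assumptions made for illustration.

```python
# Illustrative sketch only: images are small grayscale grids (lists of rows,
# values 0-255). All names and parameter values here are hypothetical.

def flip_vertical(pixels):
    """Turn the image upside down by reversing the order of its rows."""
    return [row[:] for row in reversed(pixels)]


def boost_contrast(pixels, factor=1.5, midpoint=128):
    """Push each value away from the midpoint, clamping to the 0-255 range."""
    return [
        [max(0, min(255, round(midpoint + (value - midpoint) * factor)))
         for value in row]
        for row in pixels
    ]


def blend(base, overlay, weight=0.5):
    """Mix two same-sized images; weight is the share given to the overlay."""
    return [
        [round(b * (1 - weight) + o * weight) for b, o in zip(base_row, over_row)]
        for base_row, over_row in zip(base, overlay)
    ]


# A stand-in "forest photo" and a stand-in "existing water effect".
forest = [[100, 120], [140, 160], [200, 220]]
water = [[200, 200], [200, 200], [200, 200]]

flipped = flip_vertical(forest)
contrasted = boost_contrast(flipped, factor=2.0)
waterfall = blend(contrasted, water, weight=0.25)
```

With a real photo you would swap the grids for actual image data and tune the contrast and blend weight by eye, which is very much in the spirit of getting to “good enough” first.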